Workflow-Level Data Quality: A Smarter Approach to Governance

As data volume, velocity, and variety continue to grow, poor data quality has evolved from a technical nuisance into a material business concern. Organizations continue to invest heavily in analytics and AI, but those projects fail when they are built on bad data. Industry studies estimate that poor data quality costs organizations millions of dollars every year.

The root problem is not a lack of tools but an outdated approach to governance. When data quality is a manual process or an afterthought, problems surface too late: after they have already affected dashboards, models, and business results. The modern data landscape demands a shift from reactive problem-solving to proactive, workflow-level data quality that runs continuously.

“Poor data quality is the primary reason for 60% of business failures.” (Gartner)

Why Manual Data Quality Checks No Longer Scale

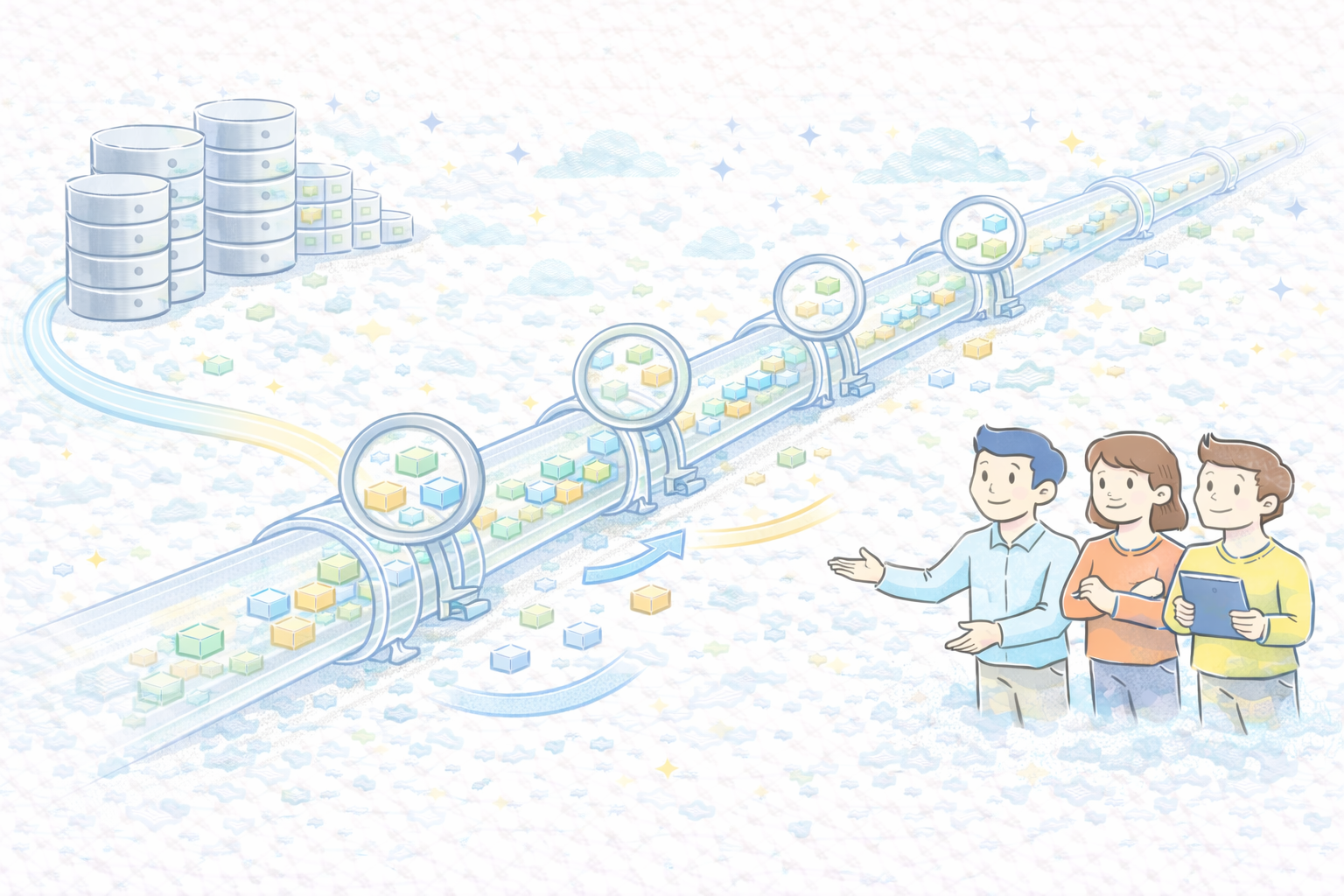

In today’s data environment, data flows constantly from a wide variety of sources, such as cloud infrastructure, operational systems, APIs, and IoT devices. At this volume and velocity, manual validation simply cannot keep pace with ingestion or transformation.

To maintain data integrity, validation must be built into the workflow itself. Quality checks should run automatically as soon as data enters the system and again at every step of the transformation process. Without this automation, teams work in the dark and discover issues only after the data has already been consumed.
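The following minimal Python sketch illustrates this idea. It assumes records arrive as plain dictionaries. The names `not_null`, `run_stage`, `QualityError`, and the fields `order_id`, `amount`, and `amount_cents` are hypothetical and chosen only for illustration. The point is that every stage validates its own output before passing it on.

```python
from typing import Callable, Dict, List

# Hypothetical record shape: each record is a plain dictionary.
Record = Dict[str, object]
Check = Callable[[List[Record]], List[str]]


class QualityError(Exception):
    """Raised when a stage's quality checks fail, halting the pipeline."""


def not_null(field: str) -> Check:
    """Build a check that reports records where `field` is missing or None."""
    def check(records: List[Record]) -> List[str]:
        return [
            f"record {i}: '{field}' is null"
            for i, record in enumerate(records)
            if record.get(field) is None
        ]
    return check


def run_stage(name: str, records: List[Record], checks: List[Check]) -> List[Record]:
    """Run every check on a stage's output and fail fast on any violation."""
    failures = [msg for check in checks for msg in check(records)]
    if failures:
        raise QualityError(f"{name}: " + "; ".join(failures))
    return records


def pipeline(raw: List[Record]) -> List[Record]:
    # Validate at ingestion, before any downstream step sees the data.
    ingested = run_stage("ingest", raw, [not_null("order_id"), not_null("amount")])
    # Hypothetical transformation: convert amounts to integer cents.
    converted = [{**r, "amount_cents": round(r["amount"] * 100)} for r in ingested]
    # Validate again after the transformation step.
    return run_stage("convert", converted, [not_null("amount_cents")])
```

Failing fast at the stage that produced the bad data keeps the error close to its cause. A production pipeline might route failures to an alert or a quarantine table instead of raising.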

Understanding Data Quality Beyond Accuracy

Data quality is often treated as synonymous with accuracy, but accuracy alone is not enough. A sound data system evaluates several dimensions that together determine whether the data can be trusted.

The key dimensions are:

- Accuracy: ensuring the data correctly represents real-world values

- Completeness: detecting missing or null values that skew analysis

- Consistency: ensuring consistent formats and definitions across systems

- Timeliness: making sure that the data is up-to-date and accessible when required

- Reliability: maintaining data integrity throughout processes and over time
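Several of these dimensions reduce to simple ratios that can be computed on every batch. The Python sketch below scores completeness and format consistency. The field names and the ISO date format are assumptions for illustration. Accuracy is omitted because it usually requires a trusted reference dataset to compare against.

```python
import re

# Assumed agreed format for date fields: ISO 8601 calendar dates (YYYY-MM-DD).
ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def _is_blank(value) -> bool:
    """Treat None and empty strings as missing values."""
    return value is None or value == ""


def completeness(records, field):
    """Share of records in which `field` is present and non-blank."""
    if not records:
        return 1.0  # empty batch: nothing missing (a monitor may alert on this separately)
    filled = sum(1 for r in records if not _is_blank(r.get(field)))
    return filled / len(records)


def consistency(records, field, pattern):
    """Share of non-blank values of `field` that match the agreed format."""
    values = [r[field] for r in records if not _is_blank(r.get(field))]
    if not values:
        return 1.0
    return sum(1 for v in values if pattern.match(str(v))) / len(values)


# Hypothetical batch of customer records.
batch = [
    {"email": "a@example.com", "signup_date": "2024-01-05"},
    {"email": None, "signup_date": "05/01/2024"},
    {"email": "b@example.com", "signup_date": "2024-02-10"},
    {"email": "", "signup_date": None},
]
```

For this batch, `completeness(batch, "email")` is 0.5 and two of the three non-blank signup dates match the agreed format.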

Timeliness, in particular, has become critical. When live streams, financial transactions, or IoT data drive decisions, even a small delay can produce outdated insights, and decisions based on those insights can be wrong. Each of these dimensions can be measured and monitored at the workflow level.
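Timeliness is commonly tracked as freshness, which measures how far the newest record from each source lags behind the current time. The following hedged sketch assumes each source reports the timezone-aware timestamp of its latest event. The source names, the per-source lag limits, and the 15-minute default are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Hypothetical fallback limit for sources without an explicit freshness target.
DEFAULT_MAX_LAG = timedelta(minutes=15)


def stale_sources(
    latest_event: Dict[str, datetime],
    max_lag: Dict[str, timedelta],
    now: Optional[datetime] = None,
) -> List[str]:
    """Return the sources whose newest event is older than their allowed lag.

    Timestamps must be timezone-aware; mixing aware and naive values raises TypeError.
    """
    now = now or datetime.now(timezone.utc)
    return sorted(
        source
        for source, ts in latest_event.items()
        if now - ts > max_lag.get(source, DEFAULT_MAX_LAG)
    )
```

Setting tighter limits for fast-moving sources, such as payments, and looser ones for slower sources keeps the alert meaningful for each workflow.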

Why AI Is Becoming Essential for Data Quality

Artificial intelligence is commonly viewed as a consumer of data, but it is increasingly becoming an enabler of data quality. Machine learning models can analyze historical trends and metadata to detect anomalies and anticipate data problems before they occur.

By embedding AI in data workflows, companies can anticipate the quality of their data rather than react to problems after the fact. Pipelines can flag issues, or even fix some of them automatically, before they escalate into incidents that require engineering resources to resolve.
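The sketch below is a simple stand-in for the machine learning models described above. It flags a day's row count as anomalous when that count falls far outside the historical distribution, using a z-score. This is a statistical baseline, not an ML model, and the three-standard-deviation threshold is an assumption. Real systems often also account for seasonality and trends.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], today: float, threshold: float = 3.0) -> bool:
    """Flag `today` if it lies more than `threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # too little history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu  # any change from a perfectly flat history is unusual
    return abs(today - mu) / sigma > threshold


# Hypothetical daily row counts for one table.
daily_rows = [1000, 1020, 980, 1010, 990]
```

With this history, a day with 1,005 rows passes. A day with 400 rows, which may indicate a partially failed upstream load, is flagged.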

DataOps and the Shift to Shared Ownership

Technical automation alone cannot sustain data quality over time. Governance is effective when it is backed by a collaborative operating model. DataOps, which builds on the principles of DevOps, emphasizes continuous monitoring and shared responsibility.

In a DataOps culture, data engineers, analysts, and business users work together to define what quality means and how to measure it. As a result, data quality is maintained throughout the data life cycle rather than checked at a single point in time. When teams collaborate around open workflows, trust in the data grows.
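One way to record what teams agree "quality" means is a data contract kept under version control next to the pipeline code. The Python sketch below is hypothetical. The `QualityRule` and `DataContract` classes, the rule names, and the team names are illustrative and are not part of any specific tool.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass(frozen=True)
class QualityRule:
    column: str
    check: str                # hypothetical rule name, e.g. "not_null" or "unique"
    owner: str                # team accountable when this rule fails
    severity: str = "error"   # "error" blocks the pipeline; "warn" only alerts


@dataclass
class DataContract:
    dataset: str
    version: str              # bumped whenever the agreed rules change
    rules: List[QualityRule] = field(default_factory=list)

    def owners_for(self, column: str) -> Set[str]:
        """Teams to notify when a rule on `column` fails."""
        return {r.owner for r in self.rules if r.column == column}

    def blocking_rules(self) -> List[QualityRule]:
        """Rules that must pass before data is published downstream."""
        return [r for r in self.rules if r.severity == "error"]


orders_contract = DataContract(
    dataset="orders",
    version="1.2.0",
    rules=[
        QualityRule("order_id", "not_null", owner="data-engineering"),
        QualityRule("order_id", "unique", owner="data-engineering"),
        QualityRule("amount", "non_negative", owner="finance-analytics", severity="warn"),
    ],
)
```

Because the contract lives in code, changes to quality rules go through the same review as any other change. This gives engineers, analysts, and business owners a shared record of what was agreed and who responds when a rule fails.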

The Governance Challenge of Modern Data Sources

The rise of NoSQL databases and IoT data streams has introduced new challenges for data governance. These sources often lack strict schemas, which makes it harder to enforce consistency and audit how data is used.

Addressing this requires governance approaches that account for the distributed, semi-structured nature of the data. By applying governance at the workflow level on scalable processing architectures, organizations can enforce quality and security policies even where the data is not traditionally structured.
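For schemaless sources, a workflow can still enforce a minimal contract at ingestion and quarantine records that break it, rather than rejecting the whole stream. This Python sketch assumes newline-delimited JSON readings from IoT devices. The required keys and types are hypothetical. Extra fields are allowed, which preserves the flexibility of semi-structured data.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical minimal contract for IoT readings: required keys and accepted types.
REQUIRED_FIELDS = {"device_id": str, "ts": str, "temperature_c": (int, float)}


def validate(doc: dict) -> List[str]:
    """Return a list of problems; an empty list means the document passes."""
    errors = []
    for key, expected in REQUIRED_FIELDS.items():
        if key not in doc:
            errors.append(f"missing '{key}'")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(doc[key], bool) or not isinstance(doc[key], expected):
            errors.append(f"'{key}' has type {type(doc[key]).__name__}")
    return errors


def route(lines: List[str]) -> Tuple[List[dict], List[Dict[str, object]]]:
    """Split raw JSON lines into accepted documents and quarantined records."""
    accepted, quarantined = [], []
    for line in lines:
        try:
            doc = json.loads(line)
        except json.JSONDecodeError as exc:
            quarantined.append({"raw": line, "errors": [f"invalid JSON: {exc.msg}"]})
            continue
        if not isinstance(doc, dict):
            quarantined.append({"raw": line, "errors": ["expected a JSON object"]})
            continue
        errors = validate(doc)
        if errors:
            quarantined.append({"raw": line, "errors": errors})
        else:
            accepted.append(doc)  # extra fields are kept as-is
    return accepted, quarantined
```

Keeping the raw line and its errors in the quarantine output preserves an audit trail. Bad records can then be inspected and replayed later.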

Embedding Governance Directly into Workflows

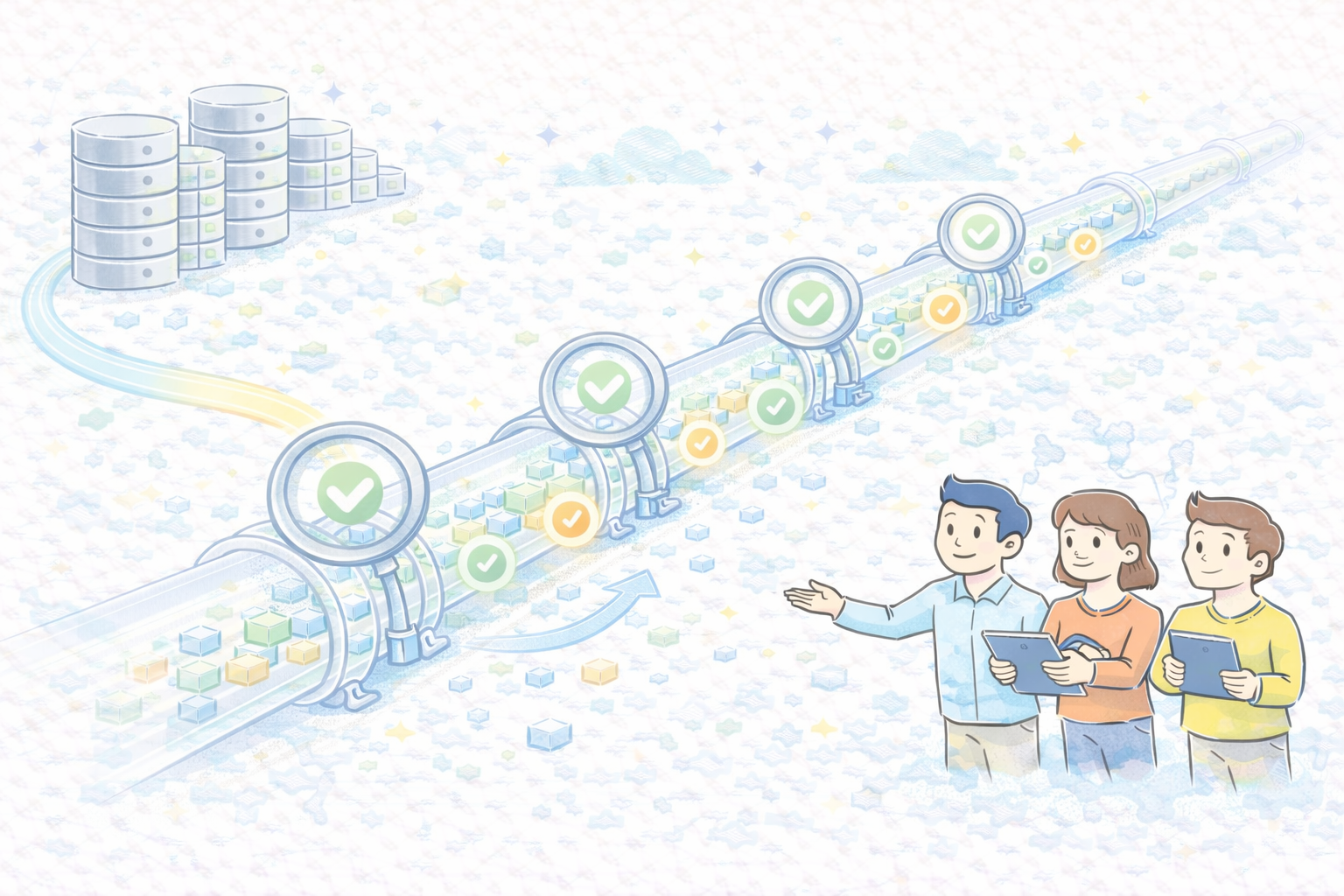

Governance is most effective when it is integrated rather than isolated. When governance is treated as a separate approval process, it slows teams down and encourages people to work around it. Instead, governance should be built into the workflow through automated checks and policy enforcement.

Integrating governance into CI/CD pipelines lets organizations enforce compliance requirements while allowing teams to keep working at speed.
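A policy check can run as an ordinary CI step that fails the build on violations. The sketch below assumes a hypothetical dataset manifest and two example policies: every dataset has an owner, and every column marked as PII declares a masking rule. Neither the manifest format nor the policies come from a specific tool.

```python
import sys
from typing import List

# Hypothetical dataset manifest that a pull request might change. In practice it
# could be loaded from a JSON or YAML file committed alongside the pipeline code.
MANIFEST = {
    "dataset": "customers",
    "owner": "crm-data",
    "columns": [
        {"name": "customer_id", "pii": False},
        {"name": "email", "pii": True, "masking": "sha256"},
        {"name": "phone", "pii": True},
    ],
}


def policy_violations(manifest: dict) -> List[str]:
    """Check two example policies and return a message for each violation."""
    violations = []
    if not manifest.get("owner"):
        violations.append("dataset has no owner")
    for col in manifest.get("columns", []):
        if col.get("pii") and not col.get("masking"):
            violations.append(f"PII column '{col['name']}' has no masking rule")
    return violations


def main() -> int:
    """Report violations on stderr and return a CI-friendly exit code."""
    violations = policy_violations(MANIFEST)
    for v in violations:
        print(f"POLICY VIOLATION: {v}", file=sys.stderr)
    return 1 if violations else 0
```

In a CI job, the script would end with `sys.exit(main())`, so a non-zero exit code fails the job and blocks the merge until the `phone` column declares a masking rule.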

Conclusion: Data Quality as a Strategic Asset

The future of data-intensive enterprises will be shaped not by the complexity of their algorithms but by the quality of their data. Workflow-level data quality turns governance from a bottleneck into an enabler, keeping data trustworthy as it flows through increasingly complex systems.

Organizations that prioritize automated quality checks, shared ownership, and integrated governance are laying a strong foundation for analytics, AI, and growth. The question is no longer whether data quality matters, but whether organizations are ready to treat it as a strategic asset rather than a recurring fire drill.

Can you clearly see where data quality is enforced, monitored, and corrected within each of your workflows?